Hyperion Philosophy

Hyperion is an analytics platform merged with an intelligence data lake, built for Cyber Threat Intelligence and Security Response use cases. The following describes the ideas behind Hyperion, which is helpful if you want to contribute to the project: understanding its philosophy may shed some light on certain design choices.

Hyperion Design Assumptions

The following assumptions were made when designing Hyperion:

- We expect a lot more writes than reads - Hyperion, similar to something like Splunk, receives data from a wide variety of sources, and analysis of this data is usually either:
  - Reliant on further data writes - entity enrichment use cases
  - Not extremely computationally demanding - periodic searches for alerting purposes, or analysts exploring the graph

- The graph is going to be forever-growing - Hyperion’s biggest selling point is storing a lot of cyber telemetry for a very long period of time to provide correlation capabilities.

- Our graph is very dense - an entity is almost never going to exist without edges going in/out of it, as the whole point is to have a strongly interconnected graph to allow for relationship exploration.

- We have a broad data model that will continue to expand - compared to more traditional applications of graphs, Hyperion has a lot of different entity types: IPs, domains, accounts, `ProcExec`s, files etc.
  - This means that an inconsistent schema can quickly balloon into a massive problem if not managed correctly.

- Edges don’t store semantic data, nodes do - we don’t want to store things like Netflow as edges between 2 nodes; instead, we want to store it as metanodes, for example 2 `IP` nodes and a `Netflow` metanode. This allows for querying:
  - Traffic from IP A to IP B
  - But also the whole `Netflow` dataset, without running into issues of querying edge properties
  - It also allows adding `ProcExec` nodes to the pattern, representing the process which initiated the flow

- As a follow-up, 2 nodes should be connected by a single edge - since we try to represent facts as nodes, and relationships between facts as edges, in most cases 2 nodes should only be linked by a single edge (for example, a `PERSON_OWNS_HOST` edge without an additional `RELATED_TO` edge or something along those lines).

- We do not expect heavy graph algorithms to be used on the entire dataset - since our data model is so broad, data science’y things like community detection, clustering etc. are not going to be performed on the whole dataset; instead, they will be performed on extracted segments of the graph.
  - In the future, such analytics can be carried out by GPU-accelerated systems rather than the DB engine itself.
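The metanode and single-edge assumptions can be made concrete with a minimal in-memory sketch in Python. This is not Hyperion's storage engine or API. The `Node`/`Graph` classes and the edge labels `IP_SENT_FLOW`, `FLOW_TO_IP` and `PROCEXEC_INITIATED_FLOW` are hypothetical names chosen for illustration.

In this sketch a `Netflow` is stored as a node between two `IP` nodes. That makes both "traffic from IP A to IP B" and "the whole `Netflow` dataset" simple node lookups. Any attempt to add a second, different edge between an already-linked pair is rejected.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Node:
    kind: str   # entity type, e.g. "IP", "Netflow", "ProcExec"
    value: str  # identifying value of the node


@dataclass
class Graph:
    # Hypothetical structure: one edge per node pair. The key is the unordered
    # pair, the value is the directed (src, label, dst) triple.
    edges: dict = field(default_factory=dict)

    def add_edge(self, src: Node, label: str, dst: Node) -> None:
        pair = frozenset((src, dst))
        existing = self.edges.get(pair)
        if existing is not None and existing != (src, label, dst):
            raise ValueError(f"{src.value} and {dst.value} are already linked by {existing[1]}")
        self.edges[pair] = (src, label, dst)

    def nodes_of_kind(self, kind: str) -> set:
        """The whole dataset of one entity type, e.g. every Netflow metanode."""
        return {n for (s, _, d) in self.edges.values() for n in (s, d) if n.kind == kind}

    def flows_between(self, src_ip: Node, dst_ip: Node) -> set:
        """Netflow metanodes sent by src_ip and received by dst_ip."""
        sent = {d for (s, lbl, d) in self.edges.values() if s == src_ip and lbl == "IP_SENT_FLOW"}
        return {s for (s, lbl, d) in self.edges.values()
                if s in sent and lbl == "FLOW_TO_IP" and d == dst_ip}


g = Graph()
ip_a, ip_b = Node("IP", "10.0.0.1"), Node("IP", "10.0.0.2")
flow = Node("Netflow", "flow-1")
proc = Node("ProcExec", "curl-4242")

g.add_edge(ip_a, "IP_SENT_FLOW", flow)
g.add_edge(flow, "FLOW_TO_IP", ip_b)
g.add_edge(proc, "PROCEXEC_INITIATED_FLOW", flow)  # process that started the flow

print(g.flows_between(ip_a, ip_b))  # the single Netflow metanode
print(g.nodes_of_kind("Netflow"))   # the whole Netflow dataset

try:
    g.add_edge(ip_a, "RELATED_TO", flow)  # a second edge between the same pair
except ValueError as err:
    print(err)
```

Because the flow is a node, its properties live on the node itself. Attaching the initiating `ProcExec` is just one more edge rather than another property on an edge.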

All of the above essentially means that we’re architecting a system which:

- Is scalable and performant for heavy-write operations

- Is capable of basic edge traversal (i.e. supporting Boolean logic)

- Has good support for in/out flows of data (Kafka support, HTTP API + Python/Go clients)

Hyperion Data Model

Hyperion is mostly a data platform with some added bits on top of it, so its most crucial element is the data and the way that it's modelled. The following section describes the principles behind Hyperion's data model (i.e. how data is stored).

Generalised entity types

In many databases you'll see different entity types for different varieties of the same general object, such as `OktaAccount`, `ZoomAccount` etc., where each type is designed to store the specific properties associated with that account. Hyperion, on the other hand, treats all accounts as the same `Account` entity and provides specific properties on the node that further specify the account type.

The reason behind these abstractions is the ability to pivot between entity types that represent the same general archetype of object without getting lost in 20 different JOINs. At the end of the day, if an analyst is pivoting from a `Person` to an `Account`, they want to see all the accounts belonging to that person and don't want to spend 20 minutes writing JOINs for `ZoomAccount`, `OktaAccount` etc. (assuming they know all the available entity types in the first place!)

Where traditional databases may store data like this:

Hyperion would instead use:
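As a rough sketch of the generalised approach in Python (an illustration, not Hyperion's schema), `provider` below is a hypothetical property used to narrow down the account type. `PERSON_OWNS_ACCOUNT` is a hypothetical in-memory stand-in for ownership edges.

With a single `Account` type, the Person to Account pivot is one lookup. Narrowing down to, say, Zoom accounts becomes a property filter rather than another entity type to JOIN.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Account:
    value: str     # e.g. the login or email of the account
    provider: str  # hypothetical property narrowing down the account type


# Hypothetical stand-in for Person -> Account ownership edges. A traditional
# model would spread these across OktaAccount, ZoomAccount, ... entity types.
PERSON_OWNS_ACCOUNT = {
    "alice": [
        Account("alice@okta.example", "okta"),
        Account("alice@zoom.example", "zoom"),
    ],
}


def accounts_of(person: str, provider: Optional[str] = None) -> List[Account]:
    """Pivot from a Person to all of their accounts, optionally narrowed by provider."""
    return [
        acc
        for acc in PERSON_OWNS_ACCOUNT.get(person, [])
        if provider is None or acc.provider == provider
    ]


print(accounts_of("alice"))          # every account, one pivot
print(accounts_of("alice", "zoom"))  # narrowed down by a property, not by type
```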

Normalised entities

This one is pretty simple: an entity can only exist once. The entity is also expected to fully describe what it's representing. For example, if it's representing a threat actor, it should also include the name of its reporter, so you can differentiate between what vendor X calls Lazarus and what vendor Y calls Lazarus, as they may not be the same.
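A minimal Python sketch of "an entity can only exist once" follows. It assumes that a threat actor's identity is the pair of its name and its reporter. `ThreatActor`, its `reporter` property and `EntityStore` are hypothetical names for illustration, not Hyperion's actual schema or storage layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatActor:
    value: str     # the name the reporter uses, e.g. "Lazarus"
    reporter: str  # hypothetical property: who reported the actor under that name


class EntityStore:
    """Upsert-by-identity store (sketch): each entity exists only once."""

    def __init__(self) -> None:
        self._entities: dict = {}

    def upsert(self, entity):
        # Frozen dataclasses compare and hash on all of their fields, so the
        # (value, reporter) pair decides whether this is a new entity.
        return self._entities.setdefault(entity, entity)

    def __len__(self) -> int:
        return len(self._entities)


store = EntityStore()
first = store.upsert(ThreatActor("Lazarus", "Vendor X"))
again = store.upsert(ThreatActor("Lazarus", "Vendor X"))  # same entity, not duplicated
other = store.upsert(ThreatActor("Lazarus", "Vendor Y"))  # different reporter, different entity
print(len(store))  # 2
```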

Correlation, correlation, correlation

All entities within the platform must contain links to any other entities they're related to, which is especially vital for composite entities. This data should be modelled at ingest (to the extent possible), rather than being postponed as a "future TODO". Remember, garbage in, garbage out: you cannot expect efficient pivoting if there are no relationships to pivot on.

Composite entities are made up of smaller components, like a `LogonAttempt` being made up of:

- The `Account` that made the logon
- An `IPv4` node from which the logon was made
- A `UserAgent` used in the logon
- Any other `dtHash`es, `DeviceFingerprint`s etc.

The `LogonAttempt` can only be considered unique if the combination of these components is truly unique.
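One way to enforce "unique only if the combination is unique" is to derive the composite entity's identifier from its components. The Python sketch below assumes a SHA-256 hash over a canonical JSON encoding of the component values. The field names and the `key()` helper are hypothetical.

The unordered extras (`dtHash`es, `DeviceFingerprint`s) are sorted before hashing, so their order does not change the key.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class LogonAttempt:
    account: str     # value of the Account that made the logon
    ipv4: str        # value of the IPv4 node the logon came from
    user_agent: str  # value of the UserAgent used in the logon
    dt_hashes: frozenset = frozenset()
    device_fingerprints: frozenset = frozenset()

    def key(self) -> str:
        """Deterministic ID derived from the full combination of components."""
        payload = json.dumps([
            self.account,
            self.ipv4,
            self.user_agent,
            sorted(self.dt_hashes),
            sorted(self.device_fingerprints),
        ])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


a = LogonAttempt("alice@corp.example", "203.0.113.7", "Mozilla/5.0",
                 device_fingerprints=frozenset({"fp-1", "fp-2"}))
b = LogonAttempt("alice@corp.example", "203.0.113.7", "Mozilla/5.0",
                 device_fingerprints=frozenset({"fp-2", "fp-1"}))
c = LogonAttempt("alice@corp.example", "198.51.100.9", "Mozilla/5.0")

print(a.key() == b.key())  # True: same components, same LogonAttempt
print(a.key() == c.key())  # False: different source IP, different LogonAttempt
```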

Normalised field names

Since we've already established we want our data schema to be repeatable and predictable when it comes to entity types, we also want the same to be true for any properties assigned to those entities. And so, all entities are expected to follow the same naming scheme.

Some examples can be found below, but bear in mind this list may not be up to date, so review the existing Hyperion data schema to confirm what the "latest" names are:

- `value` (or `_id`) - the "value" of whatever the object relates to, such as the value of an `IPv4`, `SHA256`, `EmailAddress`, `FileName` etc.
- `desc` - description of the entity, if necessary
- `url` - URL where the entity can be located. Useful for `NewsArticle`-type nodes
- `f_seen` and `l_seen` - the first and last seen timestamps of when the entity was seen across all possible data sources currently in the platform. This is meant as a general "we've seen this IP for the first time 10 years ago in a random pDNS resolution", not a more specific "we've seen this IP 10 days ago on one of our assets". The latter needs to be expressed with something more tangible, like a `first_sighting` property or, ideally, a dedicated `NetFlow` node
- `created_at` - the time the entity was first created in the platform. This property should be set automatically on creation of all entities and should be read-only
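The intended behaviour of `f_seen`, `l_seen` and `created_at` can be sketched in Python. This is an assumption-laden illustration rather than Hyperion's implementation. The `Entity` class and its `observe()` method are hypothetical.

Each new sighting from any source only widens the platform-wide first/last-seen window. `created_at` is set once on creation and rejects any later write.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Entity:
    value: str
    desc: Optional[str] = None
    url: Optional[str] = None
    f_seen: Optional[datetime] = None
    l_seen: Optional[datetime] = None
    # Set automatically on creation; not an __init__ argument.
    created_at: datetime = field(init=False, default_factory=lambda: datetime.now(timezone.utc))

    def __setattr__(self, name, val):
        # Allow the first assignment (done by __init__), reject any later one.
        if name == "created_at" and "created_at" in self.__dict__:
            raise AttributeError("created_at is read-only")
        super().__setattr__(name, val)

    def observe(self, seen_at: datetime) -> None:
        """Widen the platform-wide first/last-seen window with a sighting from any source."""
        self.f_seen = seen_at if self.f_seen is None else min(self.f_seen, seen_at)
        self.l_seen = seen_at if self.l_seen is None else max(self.l_seen, seen_at)


ip = Entity("203.0.113.7")
ip.observe(datetime(2015, 3, 1, tzinfo=timezone.utc))  # old pDNS resolution
ip.observe(datetime(2024, 6, 1, tzinfo=timezone.utc))  # recent sighting
ip.observe(datetime(2019, 1, 1, tzinfo=timezone.utc))  # inside the window, no change
print(ip.f_seen.year, ip.l_seen.year)  # 2015 2024

try:
    ip.created_at = datetime.now(timezone.utc)
except AttributeError as err:
    print(err)  # created_at is read-only
```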

Fact-based relationships

All relationships in the platform are expected to be based on factual information, not analytical conclusions. This is best described with an example: imagine 2 people (Alice and Bob) are obviously related to one another. You may be tempted to model this relationship like this:

This makes sense to you at the time you're creating this relationship, but in a year's time, when someone else is looking at the same relationship, it may not be as obvious to them. Why are they related? What evidence do we have for that? Thus, all relationships are expected to represent only provable facts, rather than analytical observations.

In this example, this can be modelled as any of the following:

Simple 1-hop relationship

Or 2-hop relationships

Keep in mind that in this case the `HOST_SEEN_WITH_IP` relationship would need to be appropriately timestamped with `f_seen` and `l_seen` properties to ensure the overlap in IP addresses is actually relevant.
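Assume the 2-hop pattern links each person's host to a shared IP through `HOST_SEEN_WITH_IP` relationships. The relevance check on `f_seen`/`l_seen` then reduces to an interval-overlap test: two closed intervals overlap exactly when each starts no later than the other ends. The Python below is a hypothetical sketch; `HostSeenWithIp` and `overlapping_ip_use` are illustrative names.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class HostSeenWithIp:
    host: str
    ip: str
    f_seen: datetime
    l_seen: datetime


def overlapping_ip_use(a: HostSeenWithIp, b: HostSeenWithIp) -> bool:
    """True if both hosts were seen with the same IP during overlapping periods."""
    return a.ip == b.ip and a.f_seen <= b.l_seen and b.f_seen <= a.l_seen


alice_host = HostSeenWithIp("alice-laptop", "203.0.113.7", datetime(2024, 1, 1), datetime(2024, 3, 1))
bob_host = HostSeenWithIp("bob-laptop", "203.0.113.7", datetime(2024, 2, 15), datetime(2024, 4, 1))
old_host = HostSeenWithIp("old-server", "203.0.113.7", datetime(2019, 1, 1), datetime(2019, 6, 1))

print(overlapping_ip_use(alice_host, bob_host))  # True: both used the IP in Feb 2024
print(overlapping_ip_use(alice_host, old_host))  # False: same IP, years apart
```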

Obviously, analytical conclusions are just as important as facts; after all, all the data in the world doesn't mean anything if it's not properly analysed and assessed. To achieve that, these analytical relationships can be represented in the form of "tags" or dedicated analytical nodes (such as `HyperionCase` or `HyperionInvestigation` nodes).

Note: the latter do not exist yet.

How Hyperion came to be

Hyperion was greatly inspired by another intelligence platform, Vertex Synapse, created by the intel analysis team at Mandiant (at the time it was codenamed Nucleus) to facilitate research for the APT1 report, which became the cornerstone of Cyber Threat Intelligence as a discipline.

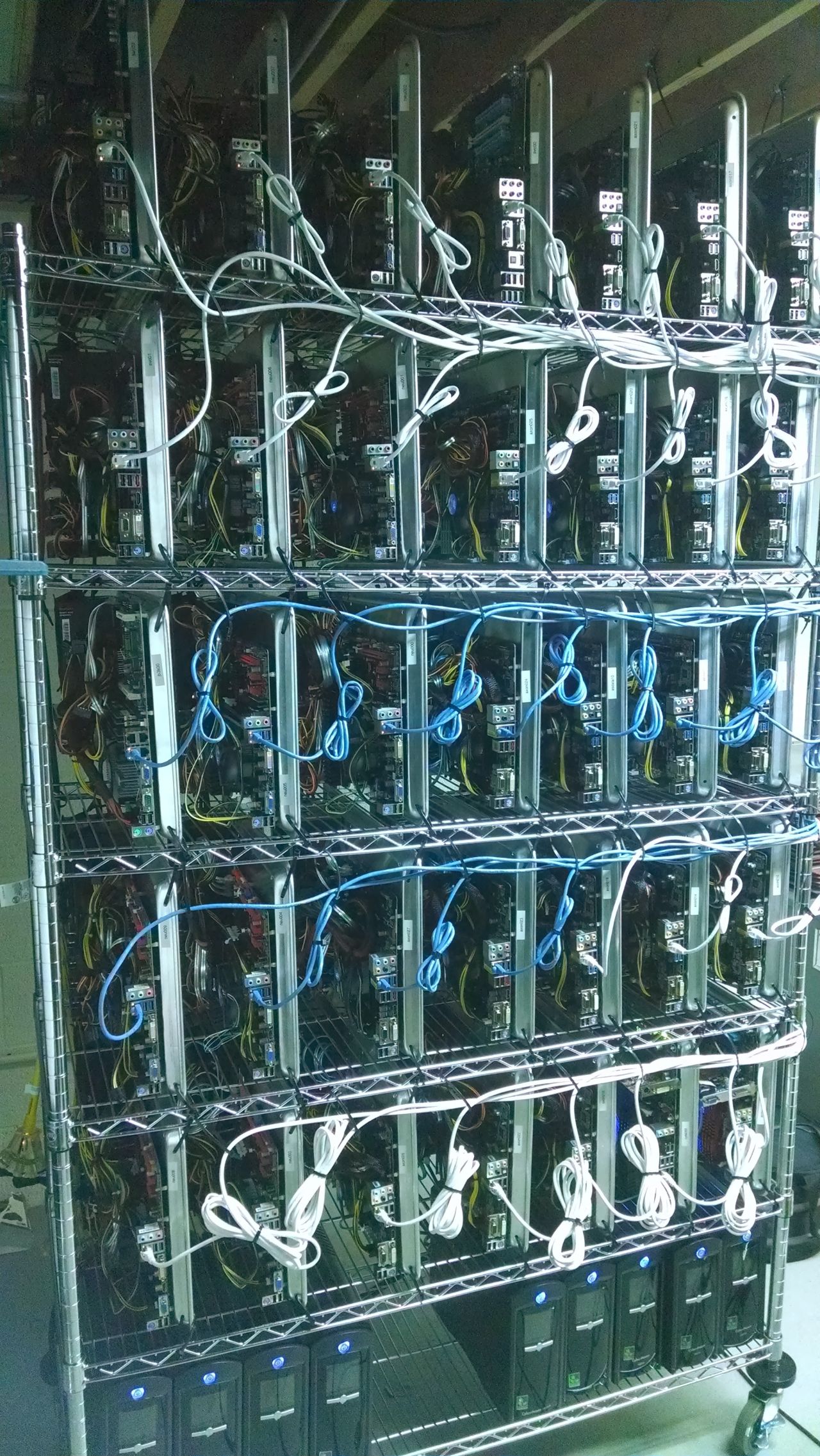

Fun fact, this is how the first version of Nucleus looked like:

Vertex Synapse is technically a "TIP" (Threat Intelligence Platform), but unlike most vendors in the field (Anomali, EclecticIQ etc.) they chose not to cater to most businesses with nice-looking UIs and pewpew maps. Instead, they focused on highly technical research teams who needed a way to store, correlate and analyse the threat data collected from their clients. So, they created a database with a strongly defined data model (where all entity types are already defined for the user) and a bunch of "connectors" that enrich data already in the platform.

Such an approach has a number of benefits, but in relation to Hyperion the strongest influence is that all data within the platform is structured in a consistent and predictable way, which allows for more confidence when pivoting from entity type to entity type.